Hey folks!

I'm using the latest Traefik v2 to provide ingress at ElfHosted; here is my HelmRelease.

We have 20 nodes, and I'm running Traefik as a daemonset, so I have 20 Traefik pods. There are currently close to 4000 tenant pods in the cluster.

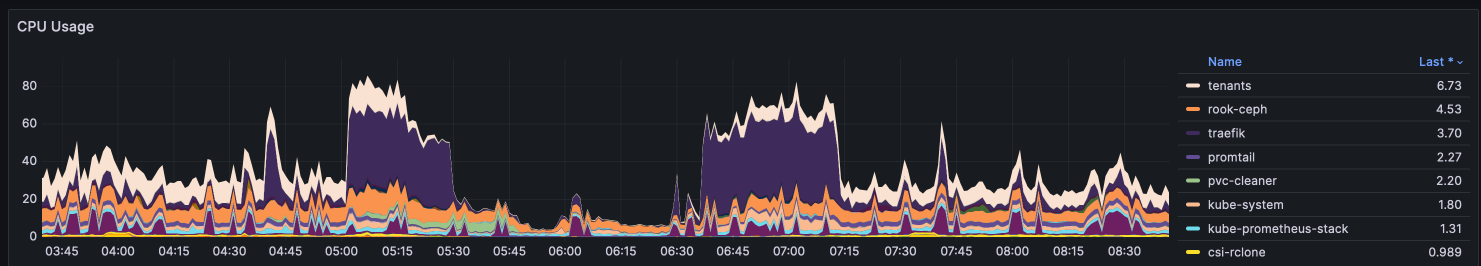

Recently when we shut down all 4000 pods for maintenance, Traefik's CPU load increased significantly during scale-down and scale-up:

AFAIK, the Traefik pods have no awareness of each other, so presumably each one is independently watching the kube-apiserver and rebuilding its routing configuration every time one of the IngressRoutes changes?
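
To put rough numbers on that guess, here's a back-of-envelope toy model. It is not Traefik's actual code, and every input is an assumption: one change event per tenant pod, every Traefik pod seeing every event independently, and each rebuild re-processing every route still present. The `batch` parameter is hypothetical and just models what coalescing several events into one rebuild would do.

```python
from typing import Tuple


def scale_down_cost(pods: int, routes: int, batch: int = 1) -> Tuple[int, int]:
    """Toy model of the presumed behaviour (assumptions, not measurements).

    Returns (total rebuilds, total route-processing work) summed across all
    Traefik pods while `routes` tenant routes are removed one event at a time.
    `batch` (hypothetical) is how many events get coalesced into one rebuild.
    """
    rebuilds_per_pod = 0
    work_per_pod = 0
    remaining = routes
    while remaining > 0:
        remaining -= min(batch, remaining)  # apply the next batch of events
        rebuilds_per_pod += 1
        work_per_pod += remaining  # each rebuild walks the routes still left
    # No coordination between pods, so every pod repeats the same work.
    return pods * rebuilds_per_pod, pods * work_per_pod


print(scale_down_cost(20, 4000))             # (80000, 159960000)
print(scale_down_cost(20, 4000, batch=100))  # (800, 1560000)
```

If that model is anywhere near right, the work grows with pods × events × routes, which would explain a big spike on both the way down and the way up.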

Is this CPU load expected behaviour? Am I doing this right, or is there a better way?

Thanks!

D