Here is my big ugly docker compose yaml:

(This is the first of the 7 stacks I am trying this on.)

```yaml
version: '3.9'

services:
  web:
    image: perseushub/web:latest
    build: ./nginx
    container_name: web
    expose:
      - "80"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.web.rule=Host(`perseus.acumenus.net`)"
      - "traefik.http.routers.web.entrypoints=websecure"
      - "traefik.http.routers.web.tls.certresolver=myresolver"
      - "traefik.http.services.web.loadbalancer.server.port=80"

  shareddb:
    image: perseushub/shareddb:latest
    build: ./shared-db
    container_name: shareddb
    volumes:
      - shareddb:/data/postgres
    expose:
      - "5432"
    labels:
      - "traefik.enable=true"

  files-manager:
    image: perseushub/files-manager:latest
    build: ./files-manager
    container_name: files-manager
    expose:
      - "10500"
    environment:
      - SPRING_PROFILES_ACTIVE=docker
    depends_on:
      - shareddb
    labels:
      - "traefik.enable=true"

  user:
    image: perseushub/user:latest
    build: ./user
    container_name: user
    environment:
      USER_ENV: Docker
    env_file:
      - user/user-envs.txt
    expose:
      - "5001"
    depends_on:
      - shareddb
    labels:
      - "traefik.enable=true"

  backend:
    image: perseushub/backend:latest
    build: ./perseus-api
    container_name: backend
    environment:
      PERSEUS_ENV: Docker
    expose:
      - "5004"
    depends_on:
      - shareddb
      - files-manager
    labels:
      - "traefik.enable=true"

  frontend:
    image: perseushub/frontend:latest
    build:
      context: ./UI
      args:
        env: prod
    container_name: frontend
    expose:
      - "4200"
    labels:
      - "traefik.enable=true"

  white-rabbit:
    image: perseushub/white-rabbit:latest
    build: ../WhiteRabbit
    container_name: white-rabbit
    expose:
      - "8000"
    environment:
      - SPRING_PROFILES_ACTIVE=docker
    depends_on:
      - shareddb
      - files-manager
    labels:
      - "traefik.enable=true"

  vocabularydb:
    image: perseushub/vocabularydb:latest
    build: ./vocabulary-db
    container_name: vocabularydb
    healthcheck:
      test: [ "CMD", "pg_isready", "-q", "-d", "vocabulary", "-U", "admin" ]
      timeout: 60s
      interval: 30s
      retries: 10
    volumes:
      - vocabularydb:/data/postgres
    ports:
      - "5431:5432"
    labels:
      - "traefik.enable=true"

  cdm-builder:
    image: perseushub/cdm-builder:latest
    build: ../ETL-CDMBuilder
    container_name: cdm-builder
    expose:
      - "9000"
    environment:
      - ASPNETCORE_ENVIRONMENT=Docker
    depends_on:
      - shareddb
      - files-manager
      - vocabularydb
    labels:
      - "traefik.enable=true"

  solr:
    image: perseushub/solr:latest
    build: ./solr
    container_name: solr
    expose:
      - "8983"
    volumes:
      - solr:/var/solr
    depends_on:
      - vocabularydb
    labels:
      - "traefik.enable=true"

  athena:
    image: perseushub/athena:latest
    build: ./athena-api
    container_name: athena
    environment:
      - ATHENA_ENV=Docker
    expose:
      - "5002"
    depends_on:
      - solr
    labels:
      - "traefik.enable=true"

  usagi:
    image: perseushub/usagi:latest
    build: ./usagi-api
    command: python /app/main.py
    container_name: usagi
    environment:
      USAGI_ENV: Docker
    expose:
      - "5003"
    depends_on:
      - shareddb
      - solr
    labels:
      - "traefik.enable=true"

  r-serve:
    image: perseushub/r-serve:latest
    build:
      context: ../DataQualityDashboard/R
      args:
        prop: docker
    container_name: r-serve
    expose:
      - "6311"
    depends_on:
      - shareddb
    labels:
      - "traefik.enable=true"

  data-quality-dashboard:
    image: perseushub/data-quality-dashboard:latest
    build:
      context: ../DataQualityDashboard
    container_name: data-quality-dashboard
    expose:
      - "8001"
    environment:
      - SPRING_PROFILES_ACTIVE=docker
    depends_on:
      - shareddb
      - files-manager
      - r-serve
    labels:
      - "traefik.enable=true"

  swagger:
    image: perseushub/swagger:latest
    build: ./swagger-ui
    container_name: swagger
    ports:
      - "8081:8080"
    labels:
      - "traefik.enable=true"

networks:
  perseus_default:
  traefik-proxy:

volumes:
  shareddb:
  vocabularydb:
  solr:
```
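Since nearly every service in this file carries only `traefik.enable=true`, it can help to tabulate labels and ports per service. Below is a minimal Python sketch, not part of the stack: `audit_services` and `router_rules` are hypothetical helpers, and `SERVICES` is a hand-copied subset of the compose file rather than the output of a YAML parser. For each service it reports whether Traefik is enabled, which `traefik.http.routers.<name>.rule` labels exist, and which ports are only `expose`d versus published with `ports`.

```python
"""Tabulate Traefik labels and port exposure per docker-compose service.

Sketch only: SERVICES is a hand-copied subset of the compose file above,
not the output of a YAML parser.
"""

from typing import Dict, List

# Hypothetical hand-copied subset of the compose file's `services:` section.
SERVICES: Dict[str, dict] = {
    "web": {
        "expose": ["80"],
        "labels": [
            "traefik.enable=true",
            "traefik.http.routers.web.rule=Host(`perseus.acumenus.net`)",
            "traefik.http.routers.web.entrypoints=websecure",
            "traefik.http.routers.web.tls.certresolver=myresolver",
            "traefik.http.services.web.loadbalancer.server.port=80",
        ],
    },
    "frontend": {"expose": ["4200"], "labels": ["traefik.enable=true"]},
    "vocabularydb": {"ports": ["5431:5432"], "labels": ["traefik.enable=true"]},
    "swagger": {"ports": ["8081:8080"], "labels": ["traefik.enable=true"]},
}


def router_rules(labels: List[str]) -> List[str]:
    """Return the values of traefik.http.routers.<router>.rule labels."""
    rules = []
    for label in labels:
        key, _, value = label.partition("=")
        if key.startswith("traefik.http.routers.") and key.endswith(".rule"):
            rules.append(value)
    return rules


def audit_services(services: Dict[str, dict]) -> List[dict]:
    """Summarise Traefik opt-in, router rules and port exposure per service."""
    report = []
    for name, spec in services.items():
        labels = spec.get("labels", [])
        report.append(
            {
                "service": name,
                "traefik_enabled": "traefik.enable=true" in labels,
                "router_rules": router_rules(labels),
                # `expose` does not publish to the host; `ports` does (HOST:CONTAINER).
                "expose_only": list(spec.get("expose", [])),
                "published": list(spec.get("ports", [])),
            }
        )
    return report


if __name__ == "__main__":
    for row in audit_services(SERVICES):
        note = "  (enabled, no router rule)" if row["traefik_enabled"] and not row["router_rules"] else ""
        print(f'{row["service"]:<14} expose={row["expose_only"]} '
              f'ports={row["published"]}{note}')
```

In this subset only `web` defines a router rule, and `frontend` makes port 4200 available to other containers but publishes nothing on the host.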

Here is the docker compose up output:

```text
web | 2023/12/01 18:13:05 [error] 19#19: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 172.26.0.17, server: _, request: "GET /favicon.ico HTTP/1.1", upstream: "http://172.17.0.1:4200/favicon.ico", host: "perseus.acumenus.net", referrer: "https://perseus.acumenus.net/"
web | 172.26.0.17 - - [01/Dec/2023:18:13:05 +0000] "GET /favicon.ico HTTP/1.1" 502 559 "https://perseus.acumenus.net/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36" "73.175.74.146"
web | 172.26.0.17 - - [01/Dec/2023:18:13:05 +0000] "GET / HTTP/1.1" 502 559 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36" "73.175.74.146"
web | 2023/12/01 18:13:05 [error] 19#19: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 172.26.0.17, server: _, request: "GET / HTTP/1.1", upstream: "http://172.17.0.1:4200/", host: "perseus.acumenus.net"
web | 2023/12/01 18:13:05 [error] 19#19: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 172.26.0.17, server: _, request: "GET /favicon.ico HTTP/1.1", upstream: "http://172.17.0.1:4200/favicon.ico", host: "perseus.acumenus.net", referrer: "https://perseus.acumenus.net/"
web | 172.26.0.17 - - [01/Dec/2023:18:13:05 +0000] "GET /favicon.ico HTTP/1.1" 502 559 "https://perseus.acumenus.net/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36" "73.175.74.146"
web | 2023/12/01 18:13:05 [error] 19#19: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 172.26.0.17, server: _, request: "GET / HTTP/1.1", upstream: "http://172.17.0.1:4200/", host: "perseus.acumenus.net"
web | 172.26.0.17 - - [01/Dec/2023:18:13:05 +0000] "GET / HTTP/1.1" 502 559 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36" "73.175.74.146"
web | 2023/12/01 18:13:05 [error] 19#19: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 172.26.0.17, server: _, request: "GET /favicon.ico HTTP/1.1", upstream: "http://172.17.0.1:4200/favicon.ico", host: "perseus.acumenus.net", referrer: "https://perseus.acumenus.net/"
web | 172.26.0.17 - - [01/Dec/2023:18:13:05 +0000] "GET /favicon.ico HTTP/1.1" 502 559 "https://perseus.acumenus.net/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36" "73.175.74.146"
backend | [2023-12-01 18:17:32,436] INFO: Running job "clear_cache (trigger: interval[0:15:00], next run at: 2023-12-01 18:17:32 UTC)" (scheduled at 2023-12-01 18:17:32.435476+00:00)
backend | [2023-12-01 18:17:32,436] INFO: Job "clear_cache (trigger: interval[0:15:00], next run at: 2023-12-01 18:17:32 UTC)" executed successfully
```
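All of the `web` error lines in this output share one shape, so they can be summarised mechanically. Here is a minimal Python sketch: the regular expression and the helper `tally_upstream_failures` are assumptions written against the lines shown above, not an nginx tool, and other nginx log formats may not match. It counts `connect() failed` errors per upstream host, port and reason, and ignores access-log lines.

```python
"""Tally nginx "connect() failed" errors by upstream host and port.

Sketch only: the regex targets the error-line format in the `web` output
above; other nginx log formats may differ.
"""

import re
from collections import Counter
from typing import Iterable

# Matches: connect() failed (111: Connection refused) ... upstream: "http://172.17.0.1:4200/"
ERROR_RE = re.compile(
    r'connect\(\) failed \((?P<errno>\d+): (?P<reason>[^)]+)\).*?'
    r'upstream: "(?P<scheme>https?)://(?P<host>[^:/"]+):(?P<port>\d+)'
)

# Lines copied from the compose output above (user agent shortened).
SAMPLE = [
    'web | 2023/12/01 18:13:05 [error] 19#19: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 172.26.0.17, server: _, request: "GET / HTTP/1.1", upstream: "http://172.17.0.1:4200/", host: "perseus.acumenus.net"',
    'web | 172.26.0.17 - - [01/Dec/2023:18:13:05 +0000] "GET / HTTP/1.1" 502 559 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)" "73.175.74.146"',
    'web | 2023/12/01 18:13:05 [error] 19#19: *1 connect() failed (111: Connection refused) while connecting to upstream, client: 172.26.0.17, server: _, request: "GET /favicon.ico HTTP/1.1", upstream: "http://172.17.0.1:4200/favicon.ico", host: "perseus.acumenus.net", referrer: "https://perseus.acumenus.net/"',
]


def tally_upstream_failures(lines: Iterable[str]) -> Counter:
    """Count failed upstream connections keyed by (host, port, reason)."""
    counts: Counter = Counter()
    for line in lines:
        match = ERROR_RE.search(line)
        if match:  # access-log lines (the 502 entries) do not match
            counts[(match["host"], int(match["port"]), match["reason"])] += 1
    return counts


if __name__ == "__main__":
    for (host, port, reason), n in tally_upstream_failures(SAMPLE).most_common():
        print(f"{host}:{port}  {reason}  x{n}")
```

On these lines every failure points at `172.17.0.1:4200` with `Connection refused`, which matches the `frontend` port that is exposed but not published in the compose file.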

And here are the dashboard screenshots of the service in question:

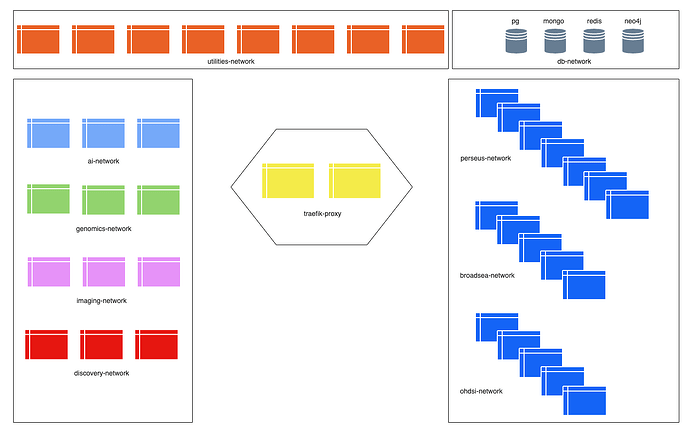

As the title of this post suggests, this is an attempt to consolidate the reverse proxy (RP) across multiple stacks. It is being built as an application ecosystem demonstrator for a non-profit consortium of more than 2,000 medical outcomes researchers around the world.

I have made significant progress on this, but tinkering will only get me so far. So any help and guidance would be greatly appreciated.