Hi there

So far I have managed to find my own way with Docker Swarm and Traefik, but for a few weeks now I have been running into walls, so please help me here.

I am running a Hetzner VPS as my public entry point (external IP), connected through a Tailscale VPN to the local LAN of my Proxmox server (192.168.10.0/24).

On the VPS I only run Tailscale and Nginx Proxy Manager (PM), which handles the SSL certificates. This is my first time using PM; for years before that my setup was Traefik-only. This part works fine.

Through PM I can reach both my Proxmox host and the 192.168.10.0/24 LAN.

On Proxmox I have three Debian VMs running as a Docker Swarm: one manager and two workers.

Overlay and macvlan networking have both been tested successfully between all nodes.

Traefik is also running (the dashboard is up and reachable) with its own macvlan IP, so the VMs themselves don't really have to open any ports.

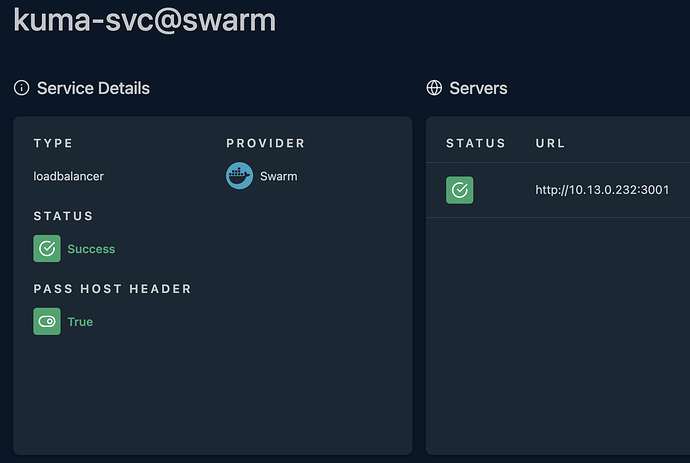

I deployed Uptime Kuma as a dummy stack via the Swarm provider, and it is perfectly reachable.

Another service runs on a separate VM and is added through the file provider; that works fine as well.
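For comparison, a file-provider entry for a service on another VM usually looks roughly like the sketch below. The router rule sits in Traefik's dynamic configuration instead of container labels, and the server URL points straight at the VM's LAN address. The file name, host name, IP address and port are hypothetical placeholders, not the actual values from my setup.

```yaml
# Hypothetical example file, e.g. /data/rules/other-vm.yml (placeholder name)
http:
  routers:
    other-vm-rtr:
      rule: "Host(`other.some.domain`)" # placeholder host name
      entryPoints:
        - web
      middlewares:
        - chain-no-auth@file # chain defined in dynamic.yml
      service: other-vm-svc
  services:
    other-vm-svc:
      loadBalancer:
        servers:
          - url: "http://192.168.10.50:8080" # placeholder LAN IP and port of the other VM
```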

But as soon as I try to run Portainer, I only get “502 Bad Gateway”.

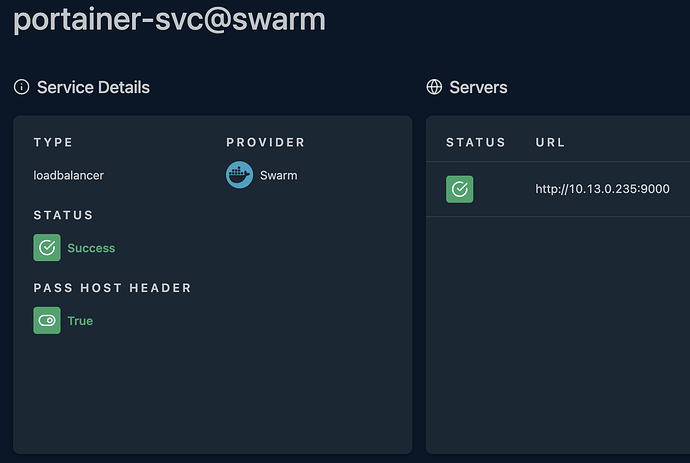

In the Traefik dashboard, the Portainer router and service look fine, exactly as configured in the labels.
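The dashboard only shows that the router and service exist, not whether Traefik can actually reach the Portainer task. One way to check that is an active health check on the service. A minimal sketch, assuming Portainer answers unauthenticated requests on `/api/system/status` (an assumption, please verify against the Portainer API documentation); the labels go next to the existing ones under `deploy.labels`:

```yaml
services:
  webapp:
    deploy:
      labels:
        # Hypothetical addition: Traefik periodically probes the backend on this path
        traefik.http.services.portainer-svc.loadbalancer.healthcheck.path: /api/system/status
        traefik.http.services.portainer-svc.loadbalancer.healthcheck.interval: 10s
        traefik.http.services.portainer-svc.loadbalancer.healthcheck.timeout: 3s
```

If the probe fails, Traefik takes the server out of rotation and should answer 503 instead of 502, which would point to a connectivity problem between Traefik and the task rather than a routing problem.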

I have run out of ideas and would appreciate some help!

Thank you very much.

The Portainer log looks good:

```
2025/09/20 09:31PM INF github.com/portainer/portainer/api/cmd/portainer/main.go:325 > encryption key file not present | filename=/run/portainer/portainer
2025/09/20 09:31PM INF github.com/portainer/portainer/api/cmd/portainer/main.go:365 > proceeding without encryption key |
2025/09/20 09:31PM INF github.com/portainer/portainer/api/database/boltdb/db.go:133 > loading PortainerDB | filename=portainer.db
2025/09/20 09:31PM INF github.com/portainer/portainer/api/chisel/service.go:200 > found Chisel private key file on disk | private-key=/data/chisel/private-key.pem
2025/09/20 21:31:10 server: Reverse tunnelling enabled
2025/09/20 21:31:10 server: Fingerprint xxxxxx=
2025/09/20 21:31:10 server: Listening on http://0.0.0.0:8000
2025/09/20 09:31PM INF github.com/portainer/portainer/api/cmd/portainer/main.go:636 > starting Portainer | build_number=227 go_version=1.24.4 image_tag=2.33.1-linux-amd64 nodejs_version=18.20.8 version=2.33.1 webpack_version=5.88.2 yarn_version=1.22.22
2025/09/20 09:31PM INF github.com/portainer/portainer/api/http/server.go:367 > starting HTTPS server | bind_address=:9443
2025/09/20 09:31PM INF github.com/portainer/portainer/api/http/server.go:351 > starting HTTP server | bind_address=:9000
```
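The log shows Portainer listening on both :9000 (HTTP) and :9443 (HTTPS). As a cross-check, the service could point Traefik at the HTTPS listener instead. A sketch, assuming Portainer still uses its default self-signed certificate on 9443, which the existing `serversTransport.insecureSkipVerify: true` in the static config should tolerate; these two labels would replace the current port 9000 label:

```yaml
services:
  webapp:
    deploy:
      labels:
        # Alternative backend: Portainer's own HTTPS listener instead of port 9000
        traefik.http.services.portainer-svc.loadbalancer.server.port: 9443
        traefik.http.services.portainer-svc.loadbalancer.server.scheme: https
```

If 9443 works while 9000 does not, the problem is specific to the HTTP listener; if both fail the same way, the network path between Traefik and the task is the more likely suspect.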

These are my configs.

traefik.yml (static config):

```yaml
---
# accessLog: {} # uncomment this line to enable access log
log:
  level: debug # ERROR, DEBUG, PANIC, FATAL, ERROR, WARN, INFO
  filepath: /data/traefik.log
  maxSize: 1
  maxBackups: 3
accessLog:
  filePath: /data/access.log
  bufferingSize: 100 # Configuring a buffer of 100 lines
  filters:
    statusCodes: 400-499
providers:
  swarm:
    exposedByDefault: false
    endpoint: 'tcp://socket-proxy:2375' #'unix:///var/run/docker.sock'
    network: cloud-public
  file:
    directory: /data/rules
    watch: true
api:
  dashboard: true # if you don't need the dashboard disable it
entryPoints:
  web:
    address: ':80'
  # Teamspeak
  speak-30033:
    address: ':30033'
  speak-10011:
    address: ':10011'
  speak-9987-udp:
    address: ':9987/udp'
  # MQTT
  mqtt-1883:
    address: ':1883'
  mqtt-9001:
    address: ':9001'
  # Plex
  plex-52945:
    address: ':52945'
  plex-32469:
    address: ':32469'
  # ??
  32410-udp:
    address: ':32410/udp'
  32412-udp:
    address: ':32412/udp'
  32413-udp:
    address: ':32413/udp'
  32414-udp:
    address: ':32414/udp'
  5353-udp:
    address: ':5353/udp'
global:
  checkNewVersion: true
  sendAnonymousUsage: true # disable this if you don't want to send anonymous usage data to traefik
serversTransport:
  insecureSkipVerify: true
metrics:
  prometheus:
    addRoutersLabels: true
```
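Note that the access log filter in this static config only keeps status codes 400-499, so the 502 responses never end up in /data/access.log. A sketch of a filter that also records 5xx responses (Traefik's documentation shows `statusCodes` as a list of codes or ranges):

```yaml
accessLog:
  filePath: /data/access.log
  bufferingSize: 100 # lines kept in memory before being written, so entries may appear with a delay
  filters:
    statusCodes:
      - "400-599" # include 5xx responses such as 502 Bad Gateway
```

With `log.level: debug` already set, /data/traefik.log around the same timestamp should then show which backend address Traefik tried to reach and why the request failed.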

dynamic.yml (in the /data/rules folder):

```yaml
---
# set more secure TLS options,
# see https://doc.traefik.io/traefik/v2.5/https/tls/
tls:
  options:
    default:
      minVersion: VersionTLS12
      cipherSuites:
        - TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
        - TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
        - TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305
        - TLS_AES_128_GCM_SHA256
        - TLS_AES_256_GCM_SHA384
        - TLS_CHACHA20_POLY1305_SHA256
      curvePreferences:
        - CurveP521
        - CurveP384
http:
  # define middlewares
  middlewares:
    chain-no-auth:
      chain:
        middlewares:
          - autodetectContenttype
          - middleware-rate-limit
          - middleware-secure-headers
    chain-basic-auth:
      chain:
        middlewares:
          - autodetectContenttype
          - middleware-rate-limit
          - middleware-secure-headers
          - middleware-basic-auth
    chain-authelia:
      chain:
        middlewares:
          - autodetectContenttype
          - middleware-rate-limit
          - middleware-secure-headers
          - middleware-authelia
    chain-nextcloud:
      chain:
        middlewares:
          - autodetectContenttype
          - middleware-rate-limit
          - nextcloud-middleware-secure-headers
          - nextcloud-middleware-redirect-webfinger
          - nextcloud-middleware-redirect-nodeinfo
          - nextcloud-middleware-redirect-dav
    # define some security header options,
    # see https://doc.traefik.io/traefik/v2.5/middlewares/http/headers/
    secHeaders:
      headers:
        browserXssFilter: true
        contentTypeNosniff: true
        frameDeny: true
        stsIncludeSubdomains: true
        stsPreload: true
        stsSeconds: 31536000
        customFrameOptionsValue: "SAMEORIGIN"
        customResponseHeaders:
          # prevent some applications from exposing too much information by removing these headers:
          server: ""
          x-powered-by: ""
    autodetectContenttype: # needed for traefik v3 - see https://doc.traefik.io/traefik/v3.0/migration/v2-to-v3/
      contentType: {}
    middleware-basic-auth:
      basicAuth:
        realm: Traefik 3 Basic Auth
        usersFile: /shared/passwd
    middleware-rate-limit:
      rateLimit:
        average: 100
        burst: 50
    middleware-secure-headers:
      headers:
        accessControlAllowMethods:
          - GET
          - OPTIONS
          - PUT
        accessControlMaxAge: 100
        hostsProxyHeaders:
          - X-Forwarded-Host
        sslRedirect: true
        stsSeconds: 63072000
        stsIncludeSubdomains: true
        stsPreload: true
        forceSTSHeader: true
        customFrameOptionsValue: allow-from https://www.athome.zone
        contentTypeNosniff: true
        browserXssFilter: true
        referrerPolicy: same-origin
        featurePolicy: camera 'none'; geolocation 'none'; microphone 'none'; payment 'none'; usb 'none'; vr 'none';
        customResponseHeaders:
          X-Robots-Tag: none,noarchive,nosnippet,notranslate,noimageindex
          server: ""
    middleware-authelia:
      forwardAuth:
        address: http://authelia:9091/api/verify?rd=https://authelia.some.domain/
        trustForwardHeader: true
        authResponseHeaders:
          - Remote-User
          - Remote-Groups
          - Remote-Email
    nextcloud-middleware-secure-headers:
      headers:
        accessControlMaxAge: 100
        sslRedirect: true
        stsSeconds: 63072000
        stsIncludeSubdomains: true
        stsPreload: true
        forceSTSHeader: true
        customFrameOptionsValue: SAMEORIGIN
        contentTypeNosniff: true
        browserXssFilter: true
        referrerPolicy: no-referrer
        featurePolicy: camera 'none'; geolocation 'none'; microphone 'none'; payment 'none'; usb 'none'; vr 'none';
        customResponseHeaders:
          X-Robots-Tag: none
          server: ""
    nextcloud-middleware-redirect-dav:
      redirectRegex:
        permanent: true
        regex: https://(.*)/.well-known/(card|cal)dav
        replacement: https://${1}/remote.php/dav/
    nextcloud-middleware-redirect-webfinger:
      redirectRegex:
        permanent: true
        regex: https://(.*)/.well-known/webfinger
        replacement: https://${1}/index.php/.well-known/webfinger/
    nextcloud-middleware-redirect-nodeinfo:
      redirectRegex:
        permanent: true
        regex: https://(.*)/.well-known/nodeinfo
        replacement: https://${1}/index.php/.well-known/nodeinfo/
```

traefik.yml compose file (the Traefik stack):

```yaml
version: '3.8'
networks:
  cloud-public:
    external: true
  cloud-socket-proxy:
    external: true
  cloud-edge:
    external: true
  traefik-macvlan:
    external: true
services:
  app:
    image: traefik:latest
    hostname: traefik
    environment:
      PGID: '1000'
      PUID: '1000'
      TZ: Europe/Berlin
    logging:
      driver: "local"
      options:
        max-file: "5"
        max-size: "10m"
    deploy:
      resources:
        limits:
          cpus: '0.50'
          memory: 128m
        reservations:
          cpus: '0.25'
          memory: 64m
      placement:
        constraints:
          - node.role == manager
      restart_policy:
        condition: on-failure
      labels:
        traefik.enable: 1
        # define traefik dashboard router and service
        traefik.http.routers.traefik.rule: Host(`traefik.some.domain`)
        traefik.http.routers.traefik.service: api@internal
        traefik.http.routers.traefik.entrypoints: web
        traefik.http.routers.traefik.middlewares: chain-basic-auth@file
        traefik.http.services.traefik.loadbalancer.server.port: 8080
    volumes:
      - /mnt/data/config/traefik-new/traefik.yml:/etc/traefik/traefik.yml
      - /mnt/data/config/traefik-new:/data
      - /mnt/data/documents/shared:/shared
    networks:
      cloud-socket-proxy:
      cloud-public:
        aliases:
          - 'traefik'
      cloud-edge:
        aliases:
          - 'traefik'
      traefik-macvlan:
    dns:
      - 1.1.1.1
```

kuma.yml compose file:

```yaml
version: '3.8'
networks:
  cloud-edge:
    external: true
  cloud-public:
    external: true
  cloud-socket-proxy:
    external: true
services:
  app:
    image: louislam/uptime-kuma:latest
    hostname: kuma
    deploy:
      restart_policy:
        condition: on-failure
      mode: replicated
      replicas: 1
      labels:
        traefik.enable: 1
        traefik.swarm.network: cloud-public
        ## HTTP Routers
        traefik.http.routers.kuma-rtr.entrypoints: web
        traefik.http.routers.kuma-rtr.rule: Host(`kuma.some.domain`)
        ## Middlewares
        traefik.http.routers.kuma-rtr.middlewares: chain-no-auth@file
        ## HTTP Services
        traefik.http.routers.kuma-rtr.service: kuma-svc
        traefik.http.services.kuma-svc.loadbalancer.server.port: 3001
    volumes:
      #- /mnt/data/config/kuma:/app/data
      - kuma:/app/data
    networks:
      cloud-edge:
      cloud-public:
      cloud-socket-proxy:
volumes:
  kuma:
```

portainer.yml compose file:

The edge.some.domain part of the labels is new: I tried it after finding it somewhere on the Portainer website, but without success. Normally I only had the portainer labels.

```yaml
version: '3.8'
networks:
  cloud-public:
    external: true
  cloud-edge:
    external: true
services:
  agent:
    image: portainer/agent:latest
    hostname: portainer-agent
    environment:
      AGENT_CLUSTER_ADDR: tasks.agent
      CAP_HOST_MANAGEMENT: 1
      TRUSTED_ORIGINS: portainer.some.domain
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/lib/docker/volumes:/var/lib/docker/volumes
      - /:/host
    deploy:
      mode: global
      placement:
        constraints:
          - node.platform.os == linux
      resources:
        # Hard limit - Docker does not allow to allocate more
        limits:
          cpus: '0.25'
          memory: 512M
    networks:
      cloud-edge:
  webapp:
    hostname: portainer
    image: portainer/portainer-ce:latest
    command: -H tcp://tasks.agent:9001 --tlsskipverify
    environment:
      TRUSTED_ORIGINS: portainer.some.domain
    deploy:
      restart_policy:
        condition: on-failure
      mode: replicated
      replicas: 1
      placement:
        constraints:
          - node.role == manager
      resources:
        # Hard limit - Docker does not allow to allocate more
        limits:
          cpus: '0.5'
          memory: 1024M
      labels:
        traefik.enable: 1
        traefik.swarm.network: cloud-public
        # define portainer router and service
        traefik.http.routers.portainer.rule: Host(`portainer.some.domain`)
        traefik.http.routers.portainer.service: portainer-svc
        traefik.http.routers.portainer.entrypoints: web
        traefik.http.routers.portainer.middlewares: chain-no-auth@file
        traefik.http.services.portainer-svc.loadbalancer.server.port: 9000
        # Edge
        traefik.http.routers.portainer-edge.rule: Host(`edge.some.domain`)
        traefik.http.routers.portainer-edge.middlewares: chain-no-auth@file
        traefik.http.routers.portainer-edge.entrypoints: web
        traefik.http.services.portainer-edge-svc.loadbalancer.server.port: 8000
        traefik.http.routers.portainer-edge.service: portainer-edge-svc
    networks:
      cloud-public:
      cloud-edge:
    volumes:
      - portainer-data:/data
volumes:
  portainer-data:
```
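To narrow the 502 down, a stripped-down label set for the webapp service may help: a single router, without the edge router and without the chain-no-auth@file middleware. This is only a debugging variant of the labels above, not a known fix:

```yaml
services:
  webapp:
    deploy:
      labels:
        traefik.enable: 1
        traefik.swarm.network: cloud-public # overlay network shared with Traefik
        traefik.http.routers.portainer.rule: Host(`portainer.some.domain`)
        traefik.http.routers.portainer.entrypoints: web
        traefik.http.routers.portainer.service: portainer-svc
        traefik.http.services.portainer-svc.loadbalancer.server.port: 9000 # Portainer's HTTP listener
```

If this still returns 502 while Kuma works with the same entrypoint and network, the middleware chain and the second router can probably be ruled out, and the focus shifts to how Traefik reaches the Portainer task on cloud-public.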